Kodeclik Blog

How to find the size of a Python variable

Did you know that the values held by your Python variables are stored in memory and therefore take up space? It is fun to tinker and explore how much space they use!

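sys.getsizeof(), from Python's built-in sys module, returns the size of an object in bytes. It measures only the memory taken by the object itself, not by other objects it refers to, which will matter later when we look at lists. The exact numbers depend on your Python version and platform; the figures in this post are typical of a 64-bit build of CPython, the standard Python interpreter.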
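As a quick illustration, the sketch below calls sys.getsizeof() on a few sample values (chosen arbitrarily) and compares the result with each object's own __sizeof__() method. The Python documentation says getsizeof() calls __sizeof__() and adds extra garbage-collector overhead for objects managed by the garbage collector, so for containers like lists and dicts the two numbers may differ, while for a plain int they should match.

```python
import sys

# A few sample values of different types (chosen only for illustration)
samples = [42, 3.14, "Kodeclik", [1, 2, 3], {"a": 1}]

for value in samples:
    # sys.getsizeof() reports the size of the object itself, in bytes
    total = sys.getsizeof(value)
    # __sizeof__() is what getsizeof() builds on; it excludes garbage-collector overhead
    own = value.__sizeof__()
    print(f"{type(value).__name__:>5}: getsizeof={total}, __sizeof__={own}")
```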

Finding the size of a Python string

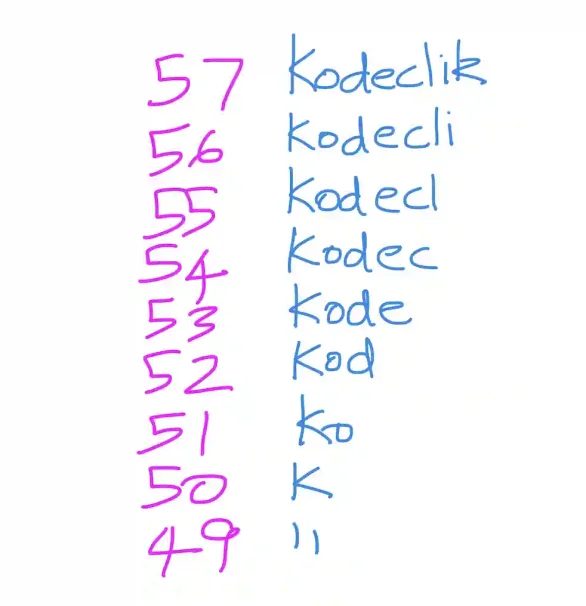

For instance, we can use it to determine how much space the string “Kodeclik” uses, as well as each of its prefixes:

import sys

# Start with full string and reduce size
kodeclik = "Kodeclik"
print(f"Size of '{kodeclik}': {sys.getsizeof(kodeclik)} bytes")

kodecli = "Kodecli"
print(f"Size of '{kodecli}': {sys.getsizeof(kodecli)} bytes")

kodecl = "Kodecl"
print(f"Size of '{kodecl}': {sys.getsizeof(kodecl)} bytes")

kodec = "Kodec"
print(f"Size of '{kodec}': {sys.getsizeof(kodec)} bytes")

kode = "Kode"
print(f"Size of '{kode}': {sys.getsizeof(kode)} bytes")

kod = "Kod"
print(f"Size of '{kod}': {sys.getsizeof(kod)} bytes")

ko = "Ko"
print(f"Size of '{ko}': {sys.getsizeof(ko)} bytes")

k = "K"
print(f"Size of '{k}': {sys.getsizeof(k)} bytes")

empty = ""
print(f"Size of empty string: {sys.getsizeof(empty)} bytes")

When you run this code, you'll see:

Size of 'Kodeclik': 57 bytes
Size of 'Kodecli': 56 bytes
Size of 'Kodecl': 55 bytes
Size of 'Kodec': 54 bytes
Size of 'Kode': 53 bytes
Size of 'Kod': 52 bytes
Size of 'Ko': 51 bytes
Size of 'K': 50 bytes
Size of empty string: 49 bytes

Each time we remove one character, the memory size decreases by exactly 1 byte, because every character in "Kodeclik" is plain ASCII and CPython stores ASCII text at one byte per character. Interestingly, the empty string still has a base size of 49 bytes! To store a string containing absolutely nothing, Python needs 49 bytes, which is its minimum overhead for storing any string.
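The 1-byte step applies to ASCII characters. For other text, CPython picks 1, 2, or 4 bytes per character based on the widest character in the string (the "flexible string representation" described in PEP 393). The sketch below uses a hypothetical helper, per_char_cost(), to measure how many bytes one more character adds; it assumes CPython, and the sample characters are arbitrary.

```python
import sys

def per_char_cost(ch):
    """Hypothetical helper: extra bytes added by one more copy of `ch` in a string."""
    # Compare a 10-character string with a 9-character string of the same character
    return sys.getsizeof(ch * 10) - sys.getsizeof(ch * 9)

# ASCII "K", Latin-1 "e with acute", Greek capital omega, and a grinning-face emoji
for ch in ["K", "\u00e9", "\u03a9", "\U0001F600"]:
    # Print the code point rather than the character to avoid console encoding issues
    print(f"U+{ord(ch):04X}: {per_char_cost(ch)} byte(s) per character")
```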

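The large overhead, even for an empty string, comes from Python's object-oriented design. Every value in Python is an object, and each object starts with a header (a C structure called PyObject) holding metadata: a pointer to the object's type and a reference count. Reference counting is a form of bookkeeping in which Python tracks how many references point to an object, so it can free the object's memory once nothing uses it any more (part of what is called “garbage collection”). String objects add further fields of their own, such as the string's length and its cached hash value.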
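To see the header and reference counting in action, here is a small sketch. A bare object() carries no data of its own, so its size is essentially just the header (typically 16 bytes on a 64-bit build: 8 for the reference count and 8 for the type pointer). sys.getrefcount() is a real function in the sys module; note that the count it returns includes a temporary reference created by the call itself, so compare changes rather than absolute values. The list used here is just an example.

```python
import sys

# object() holds no data of its own, so its size is essentially just the object header
print("Bare object():", sys.getsizeof(object()), "bytes")

data = [1, 2, 3]                # any object would do; a list is used as an example
before = sys.getrefcount(data)  # includes a temporary reference from the call itself
alias = data                    # a second name now refers to the same list
after = sys.getrefcount(data)
del alias                       # drop that extra reference again
restored = sys.getrefcount(data)

print(f"Reference count: before={before}, after={after}, restored={restored}")
```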

Finding the size of a Python integer

Let us do the same exercise with integers, exploring progressively larger integers:

import sys

# Test integers of increasing size
for i in range(1, 21):
    num = 10 ** i
    size = sys.getsizeof(num)
    print(f"Number: {num:,} -> Size: {size} bytes")

The output is:

Number: 10 -> Size: 28 bytes
Number: 100 -> Size: 28 bytes
Number: 1,000 -> Size: 28 bytes
Number: 10,000 -> Size: 28 bytes
Number: 100,000 -> Size: 28 bytes
Number: 1,000,000 -> Size: 28 bytes
Number: 10,000,000 -> Size: 28 bytes
Number: 100,000,000 -> Size: 28 bytes
Number: 1,000,000,000 -> Size: 28 bytes
Number: 10,000,000,000 -> Size: 32 bytes
Number: 100,000,000,000 -> Size: 32 bytes
Number: 1,000,000,000,000 -> Size: 32 bytes
Number: 10,000,000,000,000 -> Size: 32 bytes
Number: 100,000,000,000,000 -> Size: 32 bytes
Number: 1,000,000,000,000,000 -> Size: 32 bytes
Number: 10,000,000,000,000,000 -> Size: 32 bytes
Number: 100,000,000,000,000,000 -> Size: 32 bytes
Number: 1,000,000,000,000,000,000 -> Size: 32 bytes
Number: 10,000,000,000,000,000,000 -> Size: 36 bytes
Number: 100,000,000,000,000,000,000 -> Size: 36 bytes

When you look at the output, you'll observe an interesting pattern in integer memory usage:

- Numbers from 10 up to 10^9 (1,000,000,000) use exactly 28 bytes

- Numbers from 10^10 (10 billion) up to 10^18 use 32 bytes

- 10^19 and 10^20, the largest numbers we tested, use 36 bytes

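This stepping pattern occurs because Python uses variable-width storage for integers, using more memory only when a number needs it. On typical CPython builds, an integer's value is stored as a sequence of "digits", each holding 30 bits in 4 bytes, so the size grows by 4 bytes whenever a number needs another 30 bits. Since 2^30 is about 1.07 billion, 10^9 fits in one digit but 10^10 needs two; likewise, 2^60 is about 1.15 × 10^18, so 10^18 fits in two digits while 10^19 needs three. Unlike many programming languages that give integers a fixed size, Python can therefore handle arbitrarily large integers.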

Once again, the base 28 bytes is mostly object overhead: an 8-byte reference count, an 8-byte type pointer, and an 8-byte field recording the integer's sign and number of digits, followed by a single 4-byte digit holding the value itself.
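We can check this digit-based explanation with a short sketch. The hypothetical helper predicted_int_size() below counts how many digits a positive integer needs, reading the digit width from sys.int_info (a real attribute of the sys module) instead of hard-coding 30 bits and 4 bytes, and then compares its prediction with sys.getsizeof(). It assumes CPython, where each extra digit adds one digit's worth of bytes to the object.

```python
import sys

def predicted_int_size(n):
    """Hypothetical helper: estimate sys.getsizeof(n) for a positive int n."""
    bits = sys.int_info.bits_per_digit       # usually 30
    digit_bytes = sys.int_info.sizeof_digit  # usually 4
    # Digits needed to hold n's bits, rounded up (and at least one)
    digits = max(1, -(-n.bit_length() // bits))
    # A one-digit int such as 1 gives the base size; each extra digit adds digit_bytes
    return sys.getsizeof(1) + (digits - 1) * digit_bytes

for i in range(1, 21):
    num = 10 ** i
    print(f"10^{i}: predicted {predicted_int_size(num)}, actual {sys.getsizeof(num)}")
```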

Finding the size of a Python float

Let us now explore how Python floats are stored. Consider:

import sys

# Float
y = 10.01
print(f"Size of float {y}: {sys.getsizeof(y)} bytes")

The output will be:

Size of float 10.01: 24 bytes

A Python float stores its value as a C double: a 64-bit (8-byte) double-precision floating-point number. Yet sys.getsizeof() reports 24 bytes, because the total also includes Python's object overhead. On a typical 64-bit build, that overhead is an 8-byte reference count plus an 8-byte pointer to the object's type.

In other words, the 24-byte size is specific to Python's implementation of float objects, while the actual numerical value uses 8 bytes (64 bits) of that total space.
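One way to see the 8-byte value on its own is the standard struct module, which can pack a float into its raw C double bytes. This sketch compares that raw size with the full object size reported by sys.getsizeof(); the value 10.01 is simply reused from the example above.

```python
import struct
import sys

y = 10.01

# Pack the float into its raw C double representation ("d" = native double)
raw = struct.pack("d", y)
print("Raw double:", len(raw), "bytes")

# The full Python object also carries the object header
print("Python float object:", sys.getsizeof(y), "bytes")
print("Overhead beyond the value:", sys.getsizeof(y) - len(raw), "bytes")

# Unpacking the same 8 bytes gives back exactly the same float
print("Round trip:", struct.unpack("d", raw)[0])
```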

We leave it as an exercise for you to explore progressively larger floating-point numbers (similar to our integer program) and see how much space they take up!

Finding the size of Python lists

You can use the same ideas to find sizes of Python data structures such as lists:

import sys

for i in range(5, -1, -1):
    my_list = list(range(1, i + 1))
    print(f"Size of list with {i} elements {my_list}: {sys.getsizeof(my_list)} bytes")

The program explores how Python allocates memory for lists of different sizes. It starts with a list of 5 elements and progressively creates smaller lists until it reaches an empty list. In each iteration, it creates a list containing sequential numbers (like [1,2,3,4,5], then [1,2,3,4], and so on) and measures how much memory each list occupies using sys.getsizeof().

The output will be:

Size of list with 5 elements [1, 2, 3, 4, 5]: 96 bytes
Size of list with 4 elements [1, 2, 3, 4]: 88 bytes
Size of list with 3 elements [1, 2, 3]: 80 bytes
Size of list with 2 elements [1, 2]: 72 bytes
Size of list with 1 elements [1]: 64 bytes
Size of list with 0 elements []: 56 bytes

The results show that Python uses 56 bytes for an empty list and adds 8 bytes for each element: one 8-byte pointer (reference) per item on a 64-bit system.
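In the output above, each extra element added exactly one pointer's worth of space. A list grown with append() can behave differently: CPython over-allocates spare slots so that repeated appends don't need to resize the list every time, and sys.getsizeof() reports the allocated capacity, so the size grows in jumps. This sketch simply records the size after each append; the exact jump points are a CPython implementation detail and may vary between versions.

```python
import sys

my_list = []
sizes = [sys.getsizeof(my_list)]

# Grow the list one element at a time and record its size after each append
for i in range(1, 11):
    my_list.append(i)
    sizes.append(sys.getsizeof(my_list))

for length, size in enumerate(sizes):
    print(f"{length} elements: {size} bytes")
```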

Finding the size of nested Python data structures

Here's a program that explores list memory using lists that each hold just one element, with that element becoming increasingly complex:

import sys

# Simple integer element
list_int = [1]
print(f"Size of list with integer [1]: {sys.getsizeof(list_int)} bytes")

# Float element
list_float = [3.14]
print(f"Size of list with float [3.14]: {sys.getsizeof(list_float)} bytes")

# List element
list_with_list = [[1, 2, 3]]
print(f"Size of list with nested list [[1,2,3]]: {sys.getsizeof(list_with_list)} bytes")

# Dictionary element
list_with_dict = [{'key1': 'value1', 'key2': 'value2'}]
print(f"Size of list with dictionary: {sys.getsizeof(list_with_dict)} bytes")

The output may surprise you:

Size of list with integer [1]: 64 bytes
Size of list with float [3.14]: 64 bytes
Size of list with nested list [[1,2,3]]: 64 bytes
Size of list with dictionary: 64 bytes

All four lists take exactly 64 bytes, regardless of how complex their single element is. This is because Python lists store references (pointers) to objects, not the objects themselves. Every reference is the same size (8 bytes on a 64-bit system), and the actual objects, such as the nested list or the dictionary, are stored elsewhere in memory.
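A quick way to convince yourself that a list holds references rather than copies is to make the nested object much bigger and check whether the outer list's size changes. In this sketch the names inner and outer are just illustrative.

```python
import sys

inner = [1, 2, 3]
outer = [inner]              # outer stores a reference to inner, not a copy

size_before = sys.getsizeof(outer)
inner.extend(range(1000))    # make the nested list much bigger
size_after = sys.getsizeof(outer)

print("outer before:", size_before, "bytes")
print("outer after: ", size_after, "bytes")
print("Same object?", outer[0] is inner)
```

The size of outer stays the same, because it still holds exactly one reference; only the separate inner list grew.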

Deep size measurement with Python

For objects that contain nested structures, sys.getsizeof() returns only the size of the outer container. So let us write a recursive function that calculates the total memory usage, including nested objects:

import sys

def get_deep_size(obj):
    # Get the base size of the object itself
    size = sys.getsizeof(obj)
    # If object is a container (list, tuple, or set)
    if isinstance(obj, (list, tuple, set)):
        # Add sizes of all contained items recursively
        size += sum(get_deep_size(item) for item in obj)
    # If object is a dictionary
    elif isinstance(obj, dict):
        # Add sizes of all keys and values recursively
        size += sum(get_deep_size(k) + get_deep_size(v) for k, v in obj.items())
    return size

# Simple integer element
list_int = [1]
print(f"Size of list with integer [1]: {get_deep_size(list_int)} bytes")

# Float element
list_float = [3.14]
print(f"Size of list with float [3.14]: {get_deep_size(list_float)} bytes")

# List element
list_with_list = [[1, 2, 3]]
print(f"Size of list with nested list [[1,2,3]]: {get_deep_size(list_with_list)} bytes")

# Dictionary element
list_with_dict = [{'key1': 'value1', 'key2': 'value2'}]
print(f"Size of list with dictionary: {get_deep_size(list_with_dict)} bytes")

The get_deep_size function is a recursive tool that calculates the total memory usage of Python objects, including all their nested components. Unlike the simple sys.getsizeof() which only measures the immediate container's size, get_deep_size digs deeper into complex data structures to provide a complete memory footprint.

When the function receives an object, it first measures its base size using sys.getsizeof(). Then, it checks if the object is a container type (like a list, tuple, or set) or a dictionary. For container types, it recursively calls itself on each element inside the container, adding up all the memory used by nested elements. For dictionaries, it does something similar but measures both keys and values separately, as dictionaries are key-value pairs.

The recursive nature of the function means it can handle deeply nested structures. For example, if you have a list containing another list, which in turn contains a dictionary, the function will traverse each layer, measuring the memory at each level and summing it all up. This gives you a much more complete picture of a data structure's memory usage, revealing how nested objects can significantly increase memory consumption compared to what sys.getsizeof() reports for the container alone. (It is still an approximation: for example, an object that appears in two places is counted twice.)

The output will be:

Size of list with integer [1]: 92 bytes
Size of list with float [3.14]: 88 bytes
Size of list with nested list [[1,2,3]]: 228 bytes
Size of list with dictionary: 512 bytes

Now we can see the full size of each of these data structures!
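The get_deep_size function above has two limitations worth knowing about: it counts an object once for every place it appears, and it recurses forever (ending in a RecursionError) on a structure that contains itself. Below is a minimal sketch of one common fix, under the name get_deep_size_tracked (a hypothetical variant, not part of the original program). It remembers the id() of every object it has already counted, and it also treats frozenset as a container.

```python
import sys

def get_deep_size_tracked(obj, seen=None):
    """Hypothetical variant of get_deep_size that counts each object only once."""
    if seen is None:
        seen = set()
    # id() identifies an object while it is alive; skip objects already counted
    if id(obj) in seen:
        return 0
    seen.add(id(obj))

    size = sys.getsizeof(obj)
    if isinstance(obj, (list, tuple, set, frozenset)):
        size += sum(get_deep_size_tracked(item, seen) for item in obj)
    elif isinstance(obj, dict):
        size += sum(get_deep_size_tracked(k, seen) + get_deep_size_tracked(v, seen)
                    for k, v in obj.items())
    return size

shared = [1, 2, 3]
pair = [shared, shared]      # the same inner list appears twice
print(get_deep_size_tracked(pair))

cycle = []
cycle.append(cycle)          # a list that contains itself
print(get_deep_size_tracked(cycle))
```

Because id() values can be reused after an object is freed, the seen set is only meaningful within a single call, which is why it starts empty each time. Like the original, this sketch still ignores objects stored in other ways (for example, attributes of custom class instances).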

Using the tools we have covered in this blogpost, you should be able to find the size of any Python variable you desire!

Enjoy this blogpost? Want to learn Python with us? Sign up for 1:1 or small group classes.